- docker

- knowlege-backup

- networking

- admin

- hosting

My homelab is the unfeeling brain of my home. Without it we would survive, but boy would we miss some things. Two of the most important services are Home Assistant and Pi-Hole.

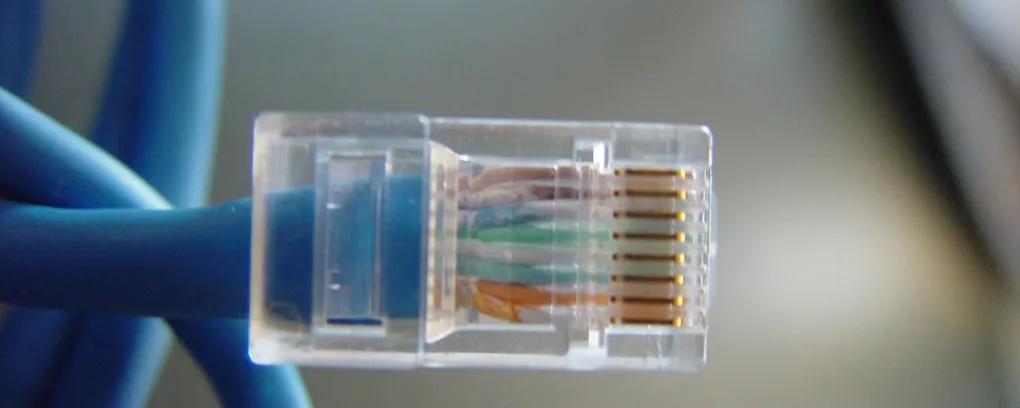

A few months ago I was troubleshooting some DNS issues on my internal LAN. More often than I would like, DNS was super slow and local name resolution was either not working at all, or working randomly.

My DNS is set up so that local clients ask Pi-Hole for the address first. If Pi-Hole does not have the name on a block list and does not have it cached, it forwards the query to Unbound DNS. Pi-Hole lives on my NAS in a Docker container, and Unbound is a service on my edge router running OPNsense. I register any local names on OPNsense, allowing Pi-Hole to focus on blocking and caching.

My issue was that exposing port 53 for both TCP and UDP was not reliable. Pi-Hole’s official documentation recommends assigning NET_ADMIN as a capability for Pi-Hole. NET_ADMIN grants broad control over the network stack, and I wasn’t comfortable giving Pi-Hole those elevated privileges just to bind DNS ports.

For Home Assistant, I had purchased a Tempest weather station, which is wonderful because it can operate fully locally. It broadcasts its data over multicast UDP, which is a little weird but lets anything on the local network read the data. Since Home Assistant was on its own Docker network, it could not receive these UDP packets and find the device.

Enter ipvlan. By default, Docker isolates containers in their own network, which blocks multicast and broadcast packets from reaching them. ipvlan lets containers get real IP addresses directly on your physical LAN instead, so they can receive all the network traffic they need. Since I use OpenTofu, in my .tf config I created the network:

```hcl
resource "docker_network" "vlan" {
  name   = "ipvlan"
  driver = "ipvlan"

  ipam_config {
    subnet   = "10.49.1.0/24"
    gateway  = "10.49.1.1"
    ip_range = "10.49.1.0/24" # optional
  }

  options = {
    parent = "enp2s0f0"
  }
}
```

Then in any container where I wanted a real IP address, I added:

```hcl
networks_advanced {
  name         = docker_network.vlan.name
  ipv4_address = "10.49.1.99"
}
```

Updated 2026-01-27: Be sure to keep your subnet updated to match your network topology, or you might find weird issues down the road.
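To show where that `networks_advanced` block sits, here is a minimal sketch of a whole container definition. It assumes the kreuzwerker/docker provider (v3 or later, for `image_id`) and reuses the `docker_network.vlan` resource from above. The resource name `pihole`, the image tag, and the `lan_subnet` local are illustrative placeholders, not my exact config. Deriving the address with `cidrhost` from a single subnet value is one way to keep the container IP from drifting away from the network definition.

```hcl
# Hypothetical sketch: resource names and image tag are placeholders.
locals {
  # Must match the subnet configured on docker_network.vlan.
  lan_subnet = "10.49.1.0/24"
}

resource "docker_image" "pihole" {
  name = "pihole/pihole:latest"
}

resource "docker_container" "pihole" {
  name    = "pihole"
  image   = docker_image.pihole.image_id
  restart = "unless-stopped"

  # Attach the container to the ipvlan network with a fixed LAN address.
  networks_advanced {
    name = docker_network.vlan.name
    # cidrhost("10.49.1.0/24", 99) evaluates to "10.49.1.99".
    ipv4_address = cidrhost(local.lan_subnet, 99)
  }
}
```

If the subnet ever changes, only `lan_subnet` and the network's `ipam_config` need to be touched, and the container address follows.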

Now instead of assigning my NAS’s primary IP as DNS I was able to assign 10.49.1.99 and Pi-Hole had full access to the network over UDP, multicast, and TCP. By doing the same for Home Assistant I was not only able to discover and easily add my Tempest device, but I also found that using Chromecast devices was much more stable.

While this does expose the container more directly to the network, it avoids the security hole that granting a service NET_ADMIN opens up. The container is reachable on the network, but it does not get the right to modify your networks.

I still access the web interfaces for both of these services via a proxy; this gives me SSL and lets me restrict access to local network devices only, either on my physical LAN or via WireGuard.

Notes:

- `enp2s0f0` is a secondary network device on my NAS. You can use any physical network device on your machine. I happened to have a second network device that was barely utilized.
- Technically both services can be accessed via their web interface over their assigned IP. Ideally I should probably set up firewall rules to block that completely. It would be trivial to do this with `ufw`.
- You can also mark the vlan as internal and tag the traffic for 802.1Q isolation. I have not used this, but it is on the “want to explore” list.
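
For the 802.1Q tagging idea in the last note, a rough, untested sketch might look like the following. It relies on the documented Docker behavior that an ipvlan parent written as `interface.vlan_id` is created as a tagged sub-interface. The VLAN ID 20, the subnet, the gateway, and the resource name are made-up placeholders, and the switch and router would need to carry that VLAN for traffic to flow.

```hcl
# Hypothetical sketch: VLAN ID, subnet, and names are placeholders.
resource "docker_network" "vlan20" {
  name   = "ipvlan20"
  driver = "ipvlan"

  ipam_config {
    subnet  = "10.49.20.0/24"
    gateway = "10.49.20.1"
  }

  options = {
    # "enp2s0f0.20" asks Docker to use a sub-interface tagged with VLAN 20.
    parent = "enp2s0f0.20"
  }
}
```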